For Peercoin’s birthday, I’m hoping we can all take a moment to explore the details and possibilities of Peercoin’s stochastic, UTXO-based mint protocol. The recent simulation breakthroughs have provided a wealth of information about how minters could ideally behave, and how we might modify the protocol to better align minters’ profit interests with protocol security.

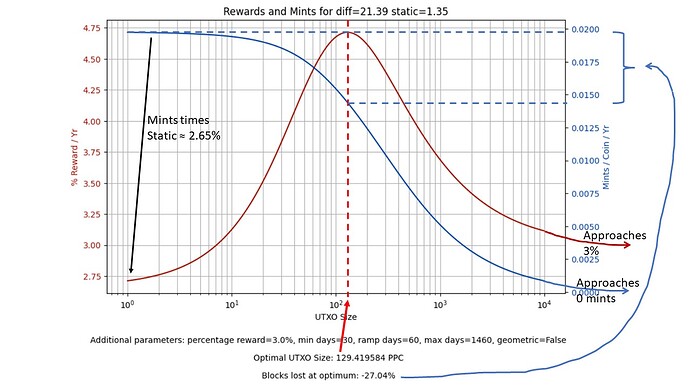

The image shown above was generated from the recent OptimumUTXO code that was written by @MatthewLM and me. I annotated a few points of interest that may generate some discussion.

If you are a minter, think about where you might lie on this chart. What size are your UTXOs? Are you minting a lot of blocks and claiming your reward?

The “Optimal UTXO Size” is a key result that we can use to modify the client’s splitting behavior in the future. This is the economic optimum, the size at which your outputs are expected to have the greatest return. Both the size and the expected reward depend strongly on protocol parameters, including difficulty and total supply. However, we can calculate this number in closed form with very little computation, so we can seek to wire it into the client’s splitting function as a target. The result: larger rewards for minters.
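To make the idea of a size-targeting splitter concrete, here is a minimal sketch. It is not the client's actual splitting code: `split_output` is a hypothetical helper, and the target size is simply passed in as a number rather than derived from the closed-form result, which is not reproduced here. Amounts are assumed to be integer base units.

```python
def split_output(value: int, target: int) -> list[int]:
    """Split `value` into pieces close to `target` (hypothetical helper).

    Both arguments are integer base units. The target would come from
    the optimal-size calculation; here it is just an input.
    """
    if value <= 0 or target <= 0:
        raise ValueError("value and target must be positive")
    # Nearest whole number of target-sized pieces, never fewer than one.
    n = max(1, round(value / target))
    base, remainder = divmod(value, n)
    # Hand out the remainder one unit at a time so the pieces sum to `value`.
    return [base + 1 if i < remainder else base for i in range(n)]


if __name__ == "__main__":
    print(split_output(1000, 300))
```

An output smaller than the target is left alone rather than split, and the pieces always add up to the original value, so no coins are created or lost by the split itself.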

One interesting quantity is the ‘blocks lost at optimum’, which compares how many blocks are minted at full splitting with how many are minted at the optimal UTXO size. This puts a number on the hypothetical ‘stake grind’, in which an attacker splits their outputs into dust to try to game the system. The stake modifier is sufficiently random, so this becomes a statistical game. The percentage shown here is how much added control that attacker gains by splitting, if we assume the rest of the network operates at the economic optimum. It is a difficulty-dependent parameter, but it is a key concept for our network’s security. Minimizing this loss can be a target for future protocol changes. The result: higher network security.
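As a rough illustration of why splitting yields more blocks, here is a toy model. It is not the OptimumUTXO code and ignores rewards entirely. The assumptions are mine: an output cannot mint for `MIN_AGE_DAYS`, after which its chance of minting per day grows linearly for `RAMP_DAYS` and then stays flat, and the constant `k` is a made-up stand-in for difficulty.

```python
import math

MIN_AGE_DAYS = 30.0  # assumed wait before an output can mint
RAMP_DAYS = 60.0     # assumed days over which the mint weight keeps growing


def expected_cycle_days(size: float, k: float) -> float:
    """Expected days between mints for one output in this toy model.

    The mint hazard after the minimum age is k * size * min(t, RAMP_DAYS).
    """
    a = k * size
    if a <= 0:
        raise ValueError("k and size must be positive")
    # Expected time spent while the hazard is still ramping up.
    ramp = math.sqrt(math.pi / (2 * a)) * math.erf(RAMP_DAYS * math.sqrt(a / 2))
    # Expected time spent after the hazard has flattened out.
    tail = math.exp(-a * RAMP_DAYS ** 2 / 2) / (a * RAMP_DAYS)
    return MIN_AGE_DAYS + ramp + tail


def blocks_per_year(total: float, size: float, k: float) -> float:
    """Expected blocks per year when `total` coins sit in outputs of `size`."""
    return (total / size) * 365.0 / expected_cycle_days(size, k)


def blocks_lost_fraction(total: float, size: float, k: float) -> float:
    """Share of blocks given up relative to splitting into dust."""
    full_split = 365.0 * RAMP_DAYS * k * total  # limit as size goes to zero
    return 1.0 - blocks_per_year(total, size, k) / full_split


if __name__ == "__main__":
    for size in (1000.0, 100.0, 10.0):
        print(size, blocks_lost_fraction(10_000.0, size, k=1e-6))
```

In this toy model the block count only ever rises as outputs get smaller, approaching a ceiling set by `k` and the total stake. The real simulations additionally account for rewards, which is what produces an economic optimum at a finite size.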

These simulations allow us to change things like the maturation period and the block time, and see what would happen to the network parameters. We can consult them before future protocol changes to see how the statistics and economics shift as we move to different protocol concepts. Ultimately, I would like to see these simulations predict what would happen to, for example, the minter participation rate if we assume that larger ‘per participant’ rewards draw additional participants, as we generally assume happens with miners on proof-of-work networks. The result: smarter tuning of network protocols to optimize blockchain security by considering economic variables.

Please feel free to ask questions or bring up additional topics of discussion. These numerical simulations allow us to model the minting process much more fully and quantitatively than we ever have before. I believe with a little bit of creativity, we could see these concepts become quite useful to the minting and development communities in the Peercoin ecosystem.

Peercoin (and its direct descendants) is the only cryptocurrency with UTXO-based minting that can benefit from the concepts discussed here. They make Peercoin stand out, not only as a pioneer, but as an ecosystem that can maintain a diverse population of minters, both whales and minnows, who all have a practical say in how the network operates.